The automotive industry is experiencing its biggest transformation in decades. The future isn’t just about electric power; it’s about intelligent, self-driving systems. AI agents are at the heart of this change, acting as the decision-making brains that make autonomous vehicles possible. These aren’t simple computer programs. They’re smart systems that sense their surroundings, make choices, and take action independently.

Key Takeaways

- AI agents handle complex tasks autonomously, from vehicle design to real-time driving decisions

- Multi-agent systems combine specialized components for perception, prediction, planning, and control

- Vehicle-to-everything communication enables coordination between vehicles and infrastructure

- Autonomous driving requires agents that can adapt, learn, and operate in unpredictable environments

- The shift from Level 2 to Level 3 autonomy marks the transition to truly intelligent systems

Understanding AI Agents in Modern Vehicles

The integration of AI into cars has moved beyond basic automation. We’re seeing a fundamental shift from systems that simply assist drivers to those that can handle the entire driving task. This change represents a new era where vehicles can adapt, learn, and make complex decisions without constant human input.

Early automotive AI focused on helping people, like improved dealership systems or basic voice commands. Today’s reality is different. Modern AI agents are goal-driven and capable of managing multi-step operations independently.

For drivers, this means vehicles that anticipate their needs. For manufacturers, it translates to assembly lines that fix their own problems and maintenance systems that prevent breakdowns before they happen. Full autonomy is being built one intelligent agent at a time.

AI Agents Across the Vehicle Lifecycle

The impact of AI agents starts long before a vehicle reaches the showroom. These intelligent systems transform how cars are designed, built, and maintained, leading to faster development, better quality, and lower costs.

Generative Design Agents

During the planning phase, specialized AI agents explore thousands of design possibilities based on engineering requirements like weight, strength, and material needs.

Their Role: These agents optimize components such as chassis parts or suspension brackets for maximum efficiency. They create lightweight, complex shapes that human engineers might never imagine, improving fuel economy or electric range. The agent makes sure every design meets all physical and manufacturing standards at once.

Manufacturing and Quality Control Agents

On the production line, autonomous agents maintain the precision and speed that modern manufacturing demands.

Their Responsibilities Include:

Predictive Maintenance: Agents analyze real-time information from factory robots and machines, tracking vibration, temperature, and power usage to predict equipment problems before they occur. This keeps production running smoothly and prevents expensive shutdowns.

Quality Assurance: High-speed vision systems inspect welds, paint finishes, and part placement with incredible accuracy. When they find a defect, these agents don’t just report it. They communicate with other production systems to adjust settings and correct the process immediately.
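To make the predictive maintenance idea concrete, here is a minimal sketch that flags a sensor reading when it strays far from its recent history. The rolling z-score approach, the function name `find_anomalies`, the window size, the threshold, and the vibration values are all illustrative assumptions, not a description of any particular factory system.

```python
from statistics import mean, stdev

def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all counts as a deviation.
            if readings[i] != mu:
                anomalies.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical vibration amplitudes (mm/s) from one robot joint.
vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 1.9, 2.1, 4.8, 2.0]
print(find_anomalies(vibration))  # index of the spike
```

A production agent would likely combine several signals (vibration, temperature, power draw) and use a learned model, but the principle of comparing new data against an expected baseline is the same.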

Supply Chain and Logistics Agents

Managing the global supply network for vehicle production involves tremendous complexity, from sourcing materials to coordinating component deliveries.

Their Function: Autonomous logistics agents constantly monitor worldwide conditions, material prices, shipping routes, and inventory levels. If they detect a disruption like a port closure or supply issue, the agent automatically recalculates the best sourcing and routing options, reducing risk and keeping production on schedule.
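The rerouting behavior described above can be sketched as a shortest-path search that is simply re-run when a node becomes unavailable. This example uses Dijkstra's algorithm as a stand-in; the network, the node names, and the costs are made up for illustration.

```python
import heapq

def cheapest_route(graph, start, goal, closed=frozenset()):
    """Dijkstra's shortest path, skipping any node listed in `closed`."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, leg_cost in graph.get(node, {}).items():
            if neighbor not in visited and neighbor not in closed:
                heapq.heappush(queue, (cost + leg_cost, neighbor, path + [neighbor]))
    return None  # no route available

# Hypothetical network: edge weights are shipping costs in arbitrary units.
routes = {
    "supplier": {"port_a": 4, "port_b": 6},
    "port_a": {"plant": 3},
    "port_b": {"plant": 4},
}

print(cheapest_route(routes, "supplier", "plant"))
print(cheapest_route(routes, "supplier", "plant", closed={"port_a"}))
```

When `port_a` is closed, the search falls back to the more expensive route through `port_b` without any manual intervention.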

What Makes AI Agents Essential for Self-Driving Cars

In autonomous vehicles, AI agents are the software systems responsible for understanding the environment, making split-second decisions, and taking action to ensure safe, efficient travel. Unlike basic programs, these agents work in a continuous loop, coordinating across the vehicle and cloud services to manage everything from sensing to fleet operations.

The Core Operating Cycle

Automotive AI agents follow a fundamental “Sense-Think-Act” pattern:

Sense: Agents gather and combine information from multiple sensors including cameras, LiDAR, radar, vehicle communication systems, and GPS. They detect objects, classify them, and track movement, providing the essential input needed for driving decisions.

Think: Using advanced machine learning and language models, agents understand what’s happening around the vehicle and predict what other road users might do. They assess risks and plan the best path forward, breaking down complex driving goals into specific, actionable steps.

Act: The agent converts the chosen plan into precise vehicle commands, controlling steering, acceleration, and braking to execute maneuvers smoothly and safely.
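The three steps above can be sketched as a simple loop. This is a toy model under heavy assumptions: a single stationary obstacle, a one-second time step, and a rule that brakes when the time gap drops below two seconds. The `World` class and all parameter values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class World:
    gap_m: float      # distance to the obstacle ahead
    speed_mps: float  # current vehicle speed

def sense(world):
    # A real agent would fuse camera, LiDAR, and radar data here.
    return {"gap_m": world.gap_m, "speed_mps": world.speed_mps}

def think(observation, safe_gap_s=2.0):
    # Brake when the time gap to the obstacle falls below the threshold.
    if observation["speed_mps"] == 0:
        return "hold"
    time_gap = observation["gap_m"] / observation["speed_mps"]
    return "brake" if time_gap < safe_gap_s else "cruise"

def act(world, command, dt=1.0, decel=5.0):
    # Apply the command, then advance the toy world by one time step.
    if command == "brake":
        world.speed_mps = max(0.0, world.speed_mps - decel * dt)
    world.gap_m -= world.speed_mps * dt

def run(world, steps):
    log = []
    for _ in range(steps):
        command = think(sense(world))
        act(world, command)
        log.append(command)
    return log

world = World(gap_m=50.0, speed_mps=10.0)
print(run(world, 7), world)
```

In a real vehicle each of these functions is a large subsystem, but the cycle of observing, deciding, and acting repeats in the same order many times per second.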

Critical Features of Automotive AI Agents

The success of self-driving vehicles depends on agents having several essential capabilities:

Real-Time Processing: The ability to handle massive amounts of sensor data and make decisions in milliseconds is crucial for highway-speed driving.

Reliability: Agents must maintain performance even when facing ambiguous or noisy data, bad weather like rain or snow, or partial system failures.

Coordination: In systems with multiple specialized agents, each must communicate and work together harmoniously to manage the driving task.

Learning Capability: Agents must continuously improve from new experiences, simulation data, and fleet information to refine their models and enhance decision-making over time.

AI Agents vs Traditional Automation

The move to autonomous vehicles highlights an important difference between simple automation and true AI agents.

Traditional automation like basic cruise control uses fixed rules. It works in stable, predictable situations and needs structured information. It’s rigid and stops working if conditions change. It’s suitable for simple, repetitive tasks like keeping a steady speed.

AI agents are objective-driven. They handle changing, complex environments and raw sensor data. They can reason when things are uncertain and find alternative solutions. They’re capable of sophisticated, multi-step thinking like navigating a busy intersection.

Traditional automation is like a train on tracks. It’s fast and efficient but can’t change course. An AI agent is like an all-terrain vehicle with a destination. It perceives obstacles, plans routes, and adapts in real-time.

Levels of Driving Autonomy and AI Agents

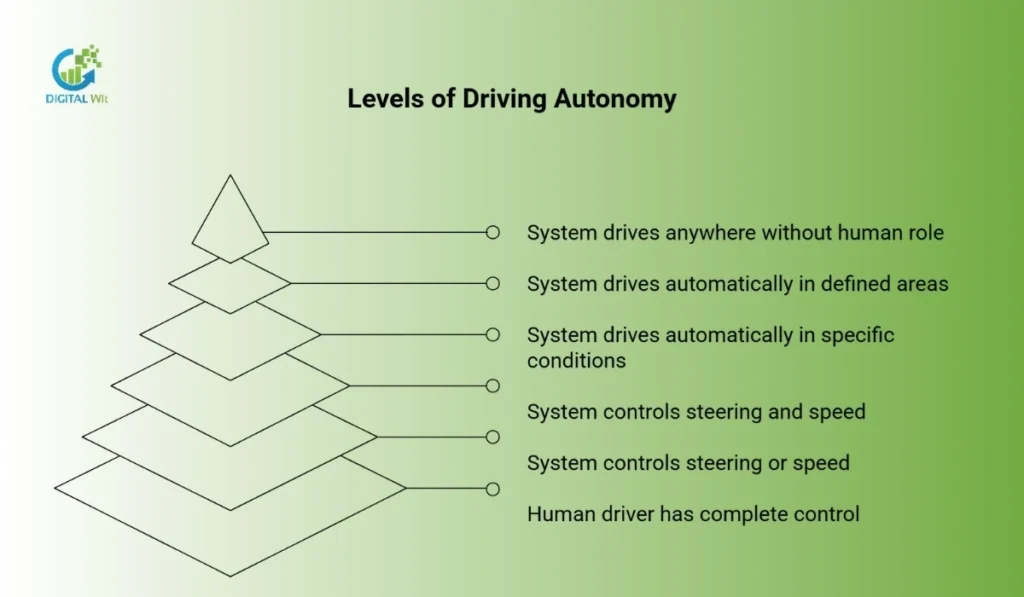

The automotive industry uses the SAE J3016 standard to define six levels of automation, which clearly show the difference between human and machine control. AI agents are the technology needed to move beyond Level 2.

Level 0 (No Automation): The human driver has complete control. No agent needed.

Level 1 (Driver Assistance): The system controls either steering or speed. The human monitors everything. Simple rule-based modules are enough.

Level 2 (Partial Automation): The system controls both steering and speed. The human must watch constantly. Coordinated simple modules handle advanced driver assistance.

Level 3 (Conditional Automation): The system drives automatically in specific conditions. The human must be ready to take over when asked. Highly coordinated AI agents manage the driving task.

Level 4 (High Automation): The system drives automatically in defined areas. No human intervention is needed within those areas. Fully autonomous agents with redundant safety systems.

Level 5 (Full Automation): The system drives anywhere. No human role required. General-purpose, highly intelligent agent networks.

The jump from Level 2 to Level 3 is where true AI agents become necessary. At Level 3 and above, the vehicle, not the human, handles the driving task within specific conditions. This requires complex, goal-driven AI agents that can manage complete situational awareness and plan appropriate maneuvers without constant human supervision.
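One way to see why the Level 2 to Level 3 boundary matters is to encode the levels as data and ask who is responsible for what. The sketch below follows the level descriptions given above; the helper function names are hypothetical.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5

def system_monitors_environment(level):
    # From Level 3 upward the system, not the human, watches the road.
    return level >= SAELevel.CONDITIONAL_AUTOMATION

def human_is_fallback(level):
    # Up to and including Level 3, a human must be ready to take over.
    return level <= SAELevel.CONDITIONAL_AUTOMATION

for level in SAELevel:
    print(level.name, system_monitors_environment(level), human_is_fallback(level))
```

Level 3 is the only level where both functions return `True`: the system monitors the road, yet a human remains the fallback.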

The Multi-Agent System: Building Complete Autonomy

Full autonomy at Level 4 and Level 5 isn’t achieved by a single AI program. Instead, it requires a carefully designed multi-agent system. In this setup, numerous specialized agents, each optimized for a specific task, must communicate and cooperate in real-time to manage driving. This collaborative structure is essential for handling the complexity and uncertainty of real-world conditions.

The primary agents in this system correspond to the classic functions of an autonomous vehicle:

Perception Agent

The perception agent is the critical first step in the sense-think-act cycle. Its goal is to transform raw, noisy sensor information into a clean, accurate picture of the vehicle’s surroundings.

Role: It combines sensor data from cameras, LiDAR, and radar, detects objects like vehicles, pedestrians, and cyclists, classifies them, and determines the vehicle’s exact position on a detailed map.

Technology: It relies heavily on deep neural networks, such as convolutional networks for camera images, to process sensor data in real-time. It builds an environment model that serves as input for the next agent.
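Combining sensor data can be illustrated with a very small example. The sketch below fuses two distance estimates by inverse-variance weighting, so the more certain sensor counts for more. This is a textbook simplification chosen for illustration; real perception stacks typically use Kalman filters or learned fusion, and the sensor values here are invented.

```python
def fuse(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    # The fused variance is smaller than any single input variance.
    return value, 1.0 / total

# Hypothetical distance to the car ahead, in meters.
radar = (25.0, 0.25)   # precise range measurement
camera = (26.0, 1.0)   # noisier range estimate
print(fuse([radar, camera]))
```

The fused estimate lands closer to the radar reading because radar is assumed to be the less noisy source in this example.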

Prediction Agent

The prediction agent handles one of the most challenging tasks: forecasting what will happen next. Its primary function is to predict the most likely behavior and path of every moving object the perception agent identifies.

Role: It assesses the intentions of other road users. Will the pedestrian step into the street? Is the car beside us about to change lanes? It generates multiple possible future paths for each object, modeling human behavior under various traffic rules and social patterns.

Importance: Accurate prediction is vital for safety. Without knowing what surrounding vehicles and people might do in the next few seconds, the planning system cannot create appropriate, conflict-free maneuvers.
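Generating several possible future paths for one object can be sketched with a constant-velocity model. Real prediction agents use learned models of behavior; this example only shows the shape of the output, and the hypothesis names and the 0.5 m/s lateral drift are assumptions made for illustration.

```python
def predict_paths(position, velocity, horizon_s=3.0, dt=1.0):
    """Return a dict mapping hypothesis name to a list of future (x, y) points."""
    x, y = position
    vx, vy = velocity
    steps = int(horizon_s / dt)
    hypotheses = {
        "keep_lane": (vx, vy),
        "drift_left": (vx, vy + 0.5),   # hypothetical lateral speed in m/s
        "drift_right": (vx, vy - 0.5),
    }
    return {
        name: [(x + hvx * dt * k, y + hvy * dt * k) for k in range(1, steps + 1)]
        for name, (hvx, hvy) in hypotheses.items()
    }

paths = predict_paths(position=(0.0, 0.0), velocity=(10.0, 0.0))
for name, points in paths.items():
    print(name, points)
```

The planning agent can then check its own candidate trajectory against every hypothesis instead of betting on a single forecast.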

Planning Agent

The planning agent acts as the vehicle’s central strategist and decision-maker. It takes information from the perception and prediction agents about the current situation and possible future scenarios and determines the best action sequence. Planning typically occurs in three layers:

Global Route Planning: Determines the high-level path from start to destination, such as which highways to use.

Behavior Planning: Decides the driving maneuver for the next few seconds, like “pass now,” “yield to the bus,” or “prepare to merge.” This balances objectives like safety, passenger comfort, and efficiency.

Local Trajectory Planning: Creates the final, collision-free, smooth, and physically possible path the vehicle must follow.
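The balancing act in behavior planning can be sketched as a weighted cost comparison across candidate maneuvers. The weights and scores below are invented; in practice they would come from the prediction agent and from tuning, and a real planner would evaluate far more candidates.

```python
# Hypothetical weights: risk is penalized far more than discomfort or delay.
WEIGHTS = {"risk": 10.0, "discomfort": 1.0, "delay": 2.0}

def choose_maneuver(candidates, weights=WEIGHTS):
    """Return the name of the candidate with the lowest weighted cost."""
    def cost(scores):
        return sum(weights[key] * scores[key] for key in weights)
    return min(candidates, key=lambda name: cost(candidates[name]))

# Scores between 0 (best) and 1 (worst) for each objective.
candidates = {
    "pass_now": {"risk": 0.6, "discomfort": 0.3, "delay": 0.0},
    "follow": {"risk": 0.1, "discomfort": 0.1, "delay": 0.5},
    "yield": {"risk": 0.05, "discomfort": 0.2, "delay": 0.9},
}

print(choose_maneuver(candidates))
```

Passing is fastest but carries the most risk, so the heavy risk weight pushes the choice toward the more conservative option.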

Control Agent

The control agent is the execution layer that translates the high-level trajectory from the planning agent into physical vehicle commands.

Role: It generates precise, frequent commands for the vehicle’s mechanical systems including the steering wheel, accelerator, and brakes.

Nature: While still an agent, the control system often uses traditional, well-understood control methods, such as PID or model predictive control, to ensure mechanical execution is smooth and accurate, minimizing passenger discomfort.
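A PID controller is one common example of a traditional control method, and it is used here purely as an illustration rather than as the method any specific vehicle uses. The sketch tracks a target speed with a toy vehicle model in which the controller output is applied directly as acceleration. The gains are arbitrary and actuator limits are left out.

```python
class PID:
    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def simulate(target=25.0, steps=600, dt=0.1):
    """Drive a toy vehicle toward the target speed and return the final speed."""
    pid = PID(kp=0.5, ki=0.05, kd=0.05)  # illustrative gains, not tuned for a real car
    speed = 0.0
    for _ in range(steps):
        accel = pid.step(target, speed, dt)
        speed += accel * dt
    return speed

print(round(simulate(), 2))
```

The appeal of this kind of controller is that its behavior is predictable and easy to analyze, which is why it often sits underneath the learning-based agents.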

Coordination Between Agents: Working Together

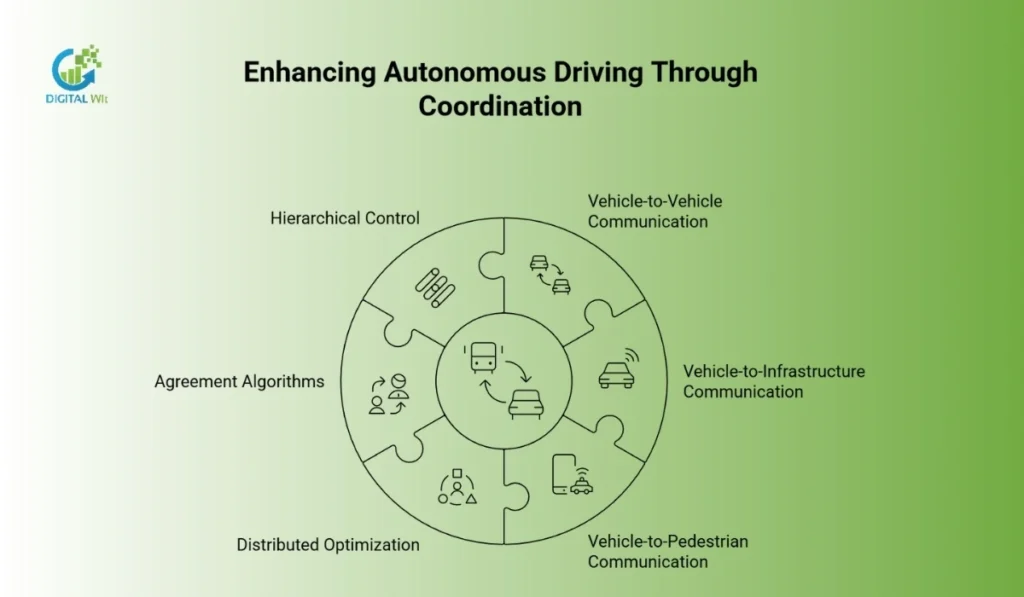

The smooth operation of a self-driving vehicle depends on perfect coordination among its internal agents. However, true efficiency is achieved when the vehicle’s agents also coordinate with external systems, leading to cooperative autonomous networks. This coordination is powered by vehicle-to-everything communication, creating shared awareness that exceeds what onboard sensors alone can provide.

The Role of Vehicle-to-Everything Communication

V2X is the digital foundation for external coordination, allowing agents to share critical information beyond line of sight. This shared data drastically reduces uncertainty and enables proactive, collective decision-making.

Vehicle-to-Vehicle: Agents communicate intentions like “I am about to brake or change lanes,” along with speed and position to nearby vehicles. This is essential for cooperative maneuvers like platooning, where multiple vehicles drive in close formation to improve fuel efficiency and increase road capacity.

Vehicle-to-Infrastructure: Agents receive real-time updates from road systems, such as traffic lights showing time remaining, construction zones, and dynamic speed limits. This allows for optimized speed recommendations and coordinated passage through intersections, minimizing stops and emissions.

Vehicle-to-Pedestrian: This allows agents to communicate with vulnerable road users equipped with smart devices, dramatically increasing perception capability in complex urban areas.
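To picture what a vehicle-to-vehicle intention broadcast might carry, here is a minimal sketch of a message that is serialized, sent, and decoded. The `IntentMessage` class, its fields, and the JSON encoding are hypothetical and chosen for readability; deployed V2X systems use standardized message sets and compact binary encodings.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class IntentMessage:
    sender_id: str
    x_m: float        # position in a shared local frame (assumption)
    y_m: float
    speed_mps: float
    intent: str       # e.g. "brake" or "lane_change_left"

    def encode(self):
        return json.dumps(asdict(self)).encode("utf-8")

    @staticmethod
    def decode(payload):
        return IntentMessage(**json.loads(payload.decode("utf-8")))

sent = IntentMessage("car_42", x_m=120.5, y_m=3.5, speed_mps=27.0, intent="brake")
received = IntentMessage.decode(sent.encode())
print(received)
```

The receiving vehicle's prediction agent can treat the declared intent as strong evidence instead of having to infer it from motion alone.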

Coordination and Conflict Resolution

Since agents operate independently, each responsible for its own vehicle or task, they must use sophisticated methods to negotiate and resolve potential conflicts, particularly in shared spaces like merging ramps or intersections.

Distributed Optimization: Agents use algorithms based on game theory to predict interaction outcomes. For example, a vehicle merging onto a highway might broadcast its planned path, and the planning agent in the main highway vehicle will adjust its speed to create room, balancing the collective goal of traffic flow with individual safety.

Agreement Algorithms: In scenarios like platooning, all agents must agree on a shared state, including speed and spacing, to maintain tight formation. Agreement algorithms, usually called consensus algorithms, ensure all vehicles in the group move as one virtual unit.

Hierarchical Control: The planning agent uses a hierarchy to manage internal and external conflicts. Global goals filter down to local decision-making, ensuring every maneuver, even one negotiated externally, contributes to the overall objective.
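The agreement step described above for platooning can be sketched with average consensus: each vehicle repeatedly nudges its speed toward the speeds of the vehicles directly ahead of and behind it. The gain value and the starting speeds are assumptions, and a real platoon would also have to handle communication delays and spacing control.

```python
def consensus_step(speeds, gain=0.3):
    """One round: each vehicle moves its speed toward its chain neighbors."""
    updated = []
    for i, v in enumerate(speeds):
        neighbors = [speeds[j] for j in (i - 1, i + 1) if 0 <= j < len(speeds)]
        updated.append(v + gain * sum(n - v for n in neighbors))
    return updated

def run_consensus(speeds, rounds=100):
    for _ in range(rounds):
        speeds = consensus_step(speeds)
    return speeds

# Hypothetical starting speeds (m/s) of four vehicles joining a platoon.
start = [24.0, 26.0, 25.0, 29.0]
print([round(v, 3) for v in run_consensus(start)])
```

No vehicle needs to know the whole platoon's state. Exchanging values with immediate neighbors is enough for the group to settle on the average of the starting speeds.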

By integrating V2X data and employing these coordination methods, AI agents transform isolated autonomous vehicles into components of a collective, highly efficient, and more reliable traffic system.

Overcoming Obstacles: The Path Forward

Despite rapid progress, the road to widespread Level 4 and Level 5 autonomy faces significant technical, ethical, and regulatory hurdles that AI agents must overcome.

Current Technical Challenges

The present obstacles largely revolve around guaranteeing reliability in unpredictable real-world conditions:

The Rare Event Problem: Agents are trained on massive datasets, but unusual events like an unexpected animal on the road, uncommon construction situations, or debris that weren’t in the training data can cause problems. Developing agents that can reason about and handle novel situations remains a central research focus.

The Simulation-to-Reality Gap: Much agent training occurs in high-quality simulation. However, transferring learned policies from the perfect simulated world to imperfect reality, where sensor readings drift and delays exist, is a major barrier.

Explainability: When an AI planning agent makes a decision that leads to an incident, regulators and the public want to know why. The opaque nature of deep learning models makes this difficult. Future agents must be designed with explainable AI principles to provide clear, understandable justification for their actions.

Ethical and Social Considerations

The deployment of autonomous agents forces society to confront deeply complex moral and economic questions:

The Problem of Moral Programming: The famous trolley dilemma, which asks whether an agent should prioritize the life of the passenger over pedestrians in an unavoidable crash, moves from a philosophical thought experiment to a programming requirement. Agents must be programmed with an explicit, agreed-upon moral framework, a societal challenge that extends far beyond engineering.

Data Privacy and Security: Autonomous agents continuously record massive amounts of information about the vehicle’s occupants, location, and surroundings. Securing this data from malicious actors and ensuring passenger privacy, especially with V2X sharing, is paramount.

Economic Impact: The widespread adoption of high-level autonomy will affect employment for professional drivers including truckers and taxi operators, requiring coordinated policy and planning for workforce transition.

The Future of AI Agents in Vehicles

As autonomous technology matures, AI agents will evolve beyond core driving tasks to manage a broader range of vehicle and ecosystem interactions, ushering in the next generation of smart mobility:

Personalized Agent Assistants: Agents will learn the unique preferences, schedules, and driving styles of passengers, becoming personalized chauffeurs that manage everything from in-vehicle environment settings to proactive scheduling.

Vehicle-to-Grid Optimization: In an electric future, AI agents will manage the vehicle’s battery as a distributed energy resource, negotiating with the smart grid to sell excess power or charge at optimal times, turning the vehicle into an active participant in the energy ecosystem.

The Fleet Management Agent: For mobility-as-a-service companies, specialized agents will manage entire fleets, optimizing routing, maintenance schedules, cleaning cycles, and rebalancing vehicles across a city based on real-time demand, maximizing efficiency and utilization.
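The vehicle-to-grid idea of charging at optimal times can be reduced to a small scheduling problem: given hourly prices, pick the cheapest hours. The prices below are made up, and the sketch ignores battery limits, departure times, and selling power back to the grid.

```python
def cheapest_charging_hours(prices, hours_needed):
    """Pick the lowest-priced hours and return them in chronological order."""
    ranked = sorted(range(len(prices)), key=lambda hour: prices[hour])
    return sorted(ranked[:hours_needed])

# Hypothetical electricity prices per kWh for six consecutive hours.
prices = [0.30, 0.25, 0.12, 0.10, 0.11, 0.28]
print(cheapest_charging_hours(prices, hours_needed=3))
```

An agent negotiating with the grid would rerun this kind of calculation whenever prices or the driver's schedule change.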

Final Thoughts

The evolution of autonomous vehicles depends on the sophistication and coordination of AI agents. These intelligent systems represent the leap from simple automation to true cognitive mobility. The multi-agent architecture, composed of specialized perception, prediction, planning, and control systems working together via V2X communication, forms the foundation of more efficient transportation.

AI agents aren’t merely components of the autonomous vehicle. They are its brains. Their continued refinement promises to transform how we manufacture, commute, and connect. For businesses looking to navigate the digital landscape of this automotive revolution, partnering with experts is essential. Digital Wit, the best digital marketing agency in Bangladesh, helps companies communicate complex technological advances to their audiences effectively.

Frequently Asked Questions

How do AI agents ensure safety when making real-time decisions?

They operate using multiple layers of safeguards, such as a dedicated safety agent, risk assessment models, and pre-programmed parameters that prioritize collision avoidance and safe system degradation above all other objectives.

What type of data do AI agents need for accurate autonomous driving?

They require vast amounts of multi-modal sensor data from cameras, LiDAR, and radar, high-definition maps for localization, and V2X communication data for external coordination.

How do automotive companies train AI agents before deploying them?

Training involves a combination of massive-scale simulation covering billions of virtual miles, road testing on closed tracks and public roads, and continuously collecting and labeling real-world fleet data to refine models.

Can AI agents operate effectively in challenging weather conditions?

This is a major challenge. Agents address weather effects by relying heavily on sensor fusion, combining data from sensors that cope better with rain and fog, like radar and thermal imaging, to compensate when optical sensors like cameras and LiDAR are compromised. Performance continues to improve through ongoing research.

What regulatory standards control the use of AI agents in vehicles?

The most widely used reference is SAE J3016, which classifies driving automation into Levels 0 through 5. It is a taxonomy rather than a binding regulation. Actual regulations vary by region, for example UN Regulation No. 157 on automated lane keeping systems, and focus on stringent validation, data security, and operational compliance.

Can AI agents learn from each other’s experiences?

Yes. Fleet learning allows multiple vehicles to share anonymized experiences, so when one vehicle encounters a new situation, others can benefit from that knowledge without experiencing it directly.